The robots.txt file plays a significant role in the overall SEO of your website. It resides on your server and tells search engines which parts of your site they may crawl. In essence, it is a set of rules that directs search engine bots toward or away from specific areas of your site.

Although most websites have the robots.txt file, not all webmasters are aware of how important this file is and how it can affect the performance of their website.

In today’s tutorial, we will tell you what exactly the robots.txt file is and everything else that you need to know about this file.

What is the WordPress Robots.txt File?

Every website on the internet is visited by robots, usually just called bots.

If you do not know what a bot is, the crawlers sent out by search engines like Google and Bing are the perfect example.

As these bots crawl around the internet, they help search engines index and rank the billions of pages that exist online.

So these bots actually help your website to be discovered by search engines. However, that does not mean that you want all of your pages to be found.

You would especially want your dashboard and admin area to remain hidden, because that is the private area from which you control the front end of your website. Sometimes you may want the entire site to stay hidden from search engines because it is still in development and not ready to go live.

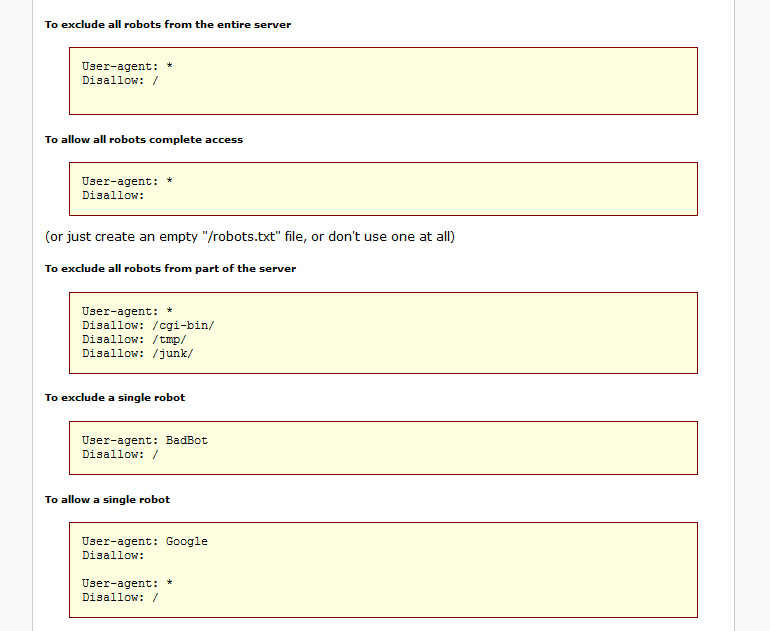

Robots.txt Commands, image from The Web Robots Page

This is where the robots.txt file comes in. This file gives you control over how bots interact with your site. Using it, you can either restrict or entirely block their access to a specific area of your site.

Does Your Site Need This File?

Search engines will crawl your site even if this file is absent, but it is still recommended to have one.

Search engines also look in this file to find your XML sitemaps, unless you have already submitted them directly through Google Webmaster Tools (now called Google Search Console).

Creating the robots.txt file has two major benefits for your website. Firstly, it helps search engines figure out which pages you want them to crawl and which ones to ignore. By doing that, you make sure that search engines focus on the pages that you want to show your audience.

Secondly, it helps you optimize resource usage by blocking bots that unnecessarily waste your server resources.

If your site is powered by WordPress, you generally do not have to make the extra effort to create the robots.txt file for your site. A virtual robots.txt is automatically created on your WordPress site. But it is still better to have a physical robots.txt file.

Is The Robots.txt File A Foolproof Way To Control Which Pages Are Indexed?

Well, as I have already mentioned, search engines won’t stop crawling your pages in the absence of this file. Even with the file in place, it is not a foolproof way of controlling which pages search engines index.

If you specifically want to keep a certain page out of the search results, use the meta noindex tag instead. Keep in mind that search engines have to be able to crawl the page in order to see that tag.

By using the robots.txt file, you are not telling search engines not to index those pages. It only asks them not to crawl those pages. Even though Google does not crawl these areas of your site, it might still index them if some other site links to them.
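For reference, the noindex tag mentioned above is written as `<meta name="robots" content="noindex">` and sits in the head of the page. The following is a minimal Python sketch, using only the standard library, that checks whether a piece of HTML carries that tag. The names `RobotsMetaFinder` and `has_noindex` are made up for this illustration and are not part of WordPress or any plugin.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for part in attributes.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML asks search engines not to index the page."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

A check like this only tells you what the page says. Whether a search engine has actually dropped the page from its index is something only that search engine's own tools can confirm.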

Where Is the Robots.txt File Located On Your WordPress site?

If your site has a physical robots.txt file, you can view it by connecting to your site with an FTP client. You can also use the cPanel file manager. The file is generally located in your site’s root folder.

You can open this file with any plain text editor, such as Notepad. It is an ordinary text file and needs no special editor.

You don’t need to worry about this file’s existence on your site. WordPress automatically creates a virtual robots.txt file for your website by default.

If you still doubt it, there is a way that will show you that this file exists on your site. Just add “/robots.txt” to the end of your domain name. It will show you the robots.txt file of your website.
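If you would rather check this from a script, here is a small Python sketch that builds the robots.txt address for any page of a site and, optionally, downloads it. The function names `robots_url` and `fetch_robots_txt` and the example.com address are placeholders chosen for this example.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and domain, replace everything else with /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt file (needs network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://www.example.com/blog/some-post/"))
```

Calling `fetch_robots_txt("https://www.example.com/")` returns the text of the file, whether it is a physical file or the virtual one that WordPress serves.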

In our case, “www.alienwp.com/robots.txt” shows the robots.txt file that we use here.

You cannot edit the default file directly, because it is a virtual file that WordPress generates on the fly. If you want to change its rules, you will have to create a physical robots.txt file on your server.

How To Create A Robots.txt File?

If your site does not have a robots.txt file, it is not very difficult to create one. You can easily do it from your admin panel via the Yoast SEO plugin. Yoast is one of the most popular SEO plugins for WordPress. If you are not using it yet, install it now to enhance your SEO.

Once Yoast is installed, you will first have to enable its advanced features. You can do that by going to SEO > Dashboard > Features > Advanced Settings.

Now go to SEO > Tools > File Editor.

Here, if Yoast does not find a physical robots.txt file, it will give you the option to create one.

Click on the Create robots.txt file option. Once you do that, you will be able to edit the content of this file from the same interface.

How To Create Robots.txt File Without An SEO Plugin?

The above process creates the file using an SEO plugin, but you can create it even if you do not use such a plugin. This can be done via SFTP, and it is very easy.

First, create an empty plain text file on your computer. Name it robots.txt, all in lowercase, and save it.

Next, connect to your site via SFTP. Kinsta has a guide on how to connect via SFTP. Once you are connected, upload the file to the root folder of your website. If you want to modify the file later, edit it and upload the new version via SFTP.
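If you prefer to generate the file with a script instead of a text editor, the sketch below writes a robots.txt file into a folder of your choice. The rules in `DEFAULT_RULES` and the function name `write_robots_txt` are only placeholders for this example. Uploading the result over SFTP is still a separate step.

```python
from pathlib import Path

# Placeholder rules; replace them with the ones your site needs.
DEFAULT_RULES = (
    "User-agent: *",
    "Disallow: /wp-admin/",
)


def write_robots_txt(directory: Path, rules=DEFAULT_RULES) -> Path:
    """Write the rules to <directory>/robots.txt and return the file path."""
    target = directory / "robots.txt"  # the file name must be lowercase
    target.write_text("\n".join(rules) + "\n", encoding="utf-8")
    return target
```

For example, `write_robots_txt(Path("."))` creates the file in the current folder, ready to be uploaded to the root folder of your site.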

How To Use The Robots.txt File To Block Access To A Specific Page?

You can block a specific file or folder of your website by using the robots.txt file. Suppose you want to block all bots from crawling the entire wp-admin folder and the wp-login.php file. The following rules will do that on your site.

    User-agent: *
    Disallow: /wp-admin/
    Disallow: /wp-login.php
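Before uploading rules like these, you can try them out locally. The sketch below uses `urllib.robotparser` from Python's standard library to test a rule set that disallows both the wp-admin folder and wp-login.php. The helper name `is_allowed` and the example.com domain are placeholders, and Python's parser is only an approximation of how each search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-login.php
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


for path in ("/wp-admin/", "/wp-login.php", "/blog/hello-world/"):
    url = "https://www.example.com" + path
    print(path, is_allowed(RULES, "Googlebot", url))
```

With these rules, the first two paths are reported as blocked and the blog post as allowed.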

What to Put In Your Robots.txt File?

When you are creating a robots.txt file for your website, you generally work with two major commands.

- User-agent – With User-agent you target a specific bot, or in simpler words, a specific search engine. Each search engine’s crawler has its own user-agent name, so the user-agent for Google (Googlebot) is not the same as the one for Bing (Bingbot). An asterisk (*) targets all bots.

- Disallow – With this command, you tell search engines not to access certain areas of your website. Well-behaved bots will not crawl the areas for which this command is used.

Sometimes you might also see the Allow command being used. It is generally needed only in niche situations, because everything that is not disallowed is allowed by default. Its main use is to open up a single file or subfolder inside a folder that you have otherwise disallowed.

The above rules are just the basic ones. There are more rules that you need to know about. Here are a few of them.

- Allow – This command explicitly allows search engines to crawl a file or folder on your server

- Sitemap – This command tells crawlers where the sitemaps of your site reside

- Host – This defines your preferred domain for a site that has multiple mirrors. It is a non-standard command that only some search engines, historically Yandex, have recognized

- Crawl-delay – With this command you can set the time interval, usually given in seconds, that search engines should wait between requests to your server. Not every crawler honors it; Googlebot, for example, ignores it
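To see how some of these commands fit together, here is a short Python sketch that reads an illustrative file with `urllib.robotparser`. The paths, the delay value and the sitemap URL are placeholders, and `site_maps()` needs Python 3.8 or newer. Note that Python's parser applies the first rule that matches, which is why the Allow line is listed before the Disallow line here; Google instead picks the most specific matching rule, so for Google the order does not matter.

```python
from urllib.robotparser import RobotFileParser

# Illustrative file; the paths, delay and sitemap URL are placeholders.
SAMPLE = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Crawl-delay: 10

Sitemap: https://www.example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(SAMPLE.splitlines())

# The Allow line opens one file inside the disallowed folder.
print(parser.can_fetch("AnyBot", "https://www.example.com/wp-admin/admin-ajax.php"))
print(parser.can_fetch("AnyBot", "https://www.example.com/wp-admin/options.php"))

# Crawl-delay and Sitemap values as read from the file.
print(parser.crawl_delay("AnyBot"))
print(parser.site_maps())
```

The Allow and Disallow pair used here mirrors the default virtual file that WordPress serves.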

How To Create Different Rules For Different Bots?

The robots.txt file has its own syntax for defining rules, which are commonly known as directives. As we have already mentioned, different bots have different user-agent names. So what if you want to set different rules for different bots in your robots.txt file?

Well, in that case, you will have to add a set of rules under a separate User-agent declaration for each bot.

The following example shows how to make one rule for all bots and another specifically for Bing.

    User-agent: *
    Disallow: /wp-admin/

    User-agent: Bingbot
    Disallow: /

With the above rules, all bots are blocked from accessing the wp-admin area of your website. Bing’s crawler, however, is blocked from accessing the entire website.
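You can confirm this behavior with a quick local test. The Python sketch below feeds the two rule groups to `urllib.robotparser` and builds a small table of which bot may fetch which path. The helper name `access_table` and the example.com domain are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /wp-admin/

User-agent: Bingbot
Disallow: /
"""


def access_table(rules: str, bots, paths, site="https://www.example.com"):
    """Map each (bot, path) pair to True (allowed) or False (blocked)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return {
        (bot, path): parser.can_fetch(bot, site + path)
        for bot in bots
        for path in paths
    }


table = access_table(RULES, ["Googlebot", "Bingbot"], ["/", "/wp-admin/"])
for (bot, path), allowed in table.items():
    print(f"{bot:10} {path:12} {'allowed' if allowed else 'blocked'}")
```

Googlebot falls under the rules for all bots, so it is blocked only from the wp-admin area, while Bingbot matches its own group and is blocked everywhere.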

Things To Avoid While Creating Your Robots.txt File

There are certain things that you should avoid doing while creating your robots.txt file. The first and most common mistake made by inexperienced site owners is to leave a space at the beginning of a line.

The second thing to keep in mind is that you cannot change the syntax of the directives. Each one must be written as a name, a colon and a value. The third thing that many people overlook is the proper use of upper and lower case.

Make sure you double check the case of what you write. The file itself must be named robots.txt in lowercase, and the paths in your rules are case-sensitive, so /WP-Admin/ is not the same as /wp-admin/. The major crawlers read directive names such as User-agent case-insensitively, so user-Agent or user-agent will normally still be understood, but sticking to the conventional User-agent spelling keeps the file consistent and easy to read.

Adding Your XML Sitemaps To The Robots.txt File

If your site is already using an SEO plugin like Yoast, then it will automatically add the commands related to your site’s XML sitemaps to the robots.txt file.

But if your plugin fails to add these lines, you will have to do it yourself. Your plugin will show you the link to your XML sitemaps, and you add each one to the robots.txt file as a Sitemap line followed by the full URL.
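If you manage the file with a script, adding the sitemap line can be automated. Here is a minimal Python sketch, assuming you already have the file's text and the sitemap link shown by your plugin. The function name `add_sitemap` and the example URL are hypothetical.

```python
def add_sitemap(robots_txt: str, sitemap_url: str) -> str:
    """Return robots_txt with a Sitemap line for sitemap_url, added only once."""
    for line in robots_txt.splitlines():
        name, _, value = line.partition(":")
        if name.strip().lower() == "sitemap" and value.strip() == sitemap_url:
            return robots_txt  # already present, nothing to do
    body = robots_txt.rstrip("\n")
    separator = "\n\n" if body else ""
    return f"{body}{separator}Sitemap: {sitemap_url}\n"


current = "User-agent: *\nDisallow: /wp-admin/\n"
print(add_sitemap(current, "https://www.example.com/sitemap_index.xml"))
```

Running the function a second time on its own output changes nothing, so it is safe to call whenever the file is regenerated.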

How To Know That Your Robots.txt File Is Not Affecting Your Content?

Sometimes you may want to check whether your content is being affected by your robots.txt file. To make sure that no content is affected, you can use the Webmaster Tools feature called ‘Fetch as Google’. This tool lets you see whether your robots.txt file is blocking access to your content.

For this, you will first have to log in to Google Webmaster Tools. Now go to Diagnostics and then Fetch as Googlebot. There you can enter the URL of a page on your site and see whether Google has trouble accessing it. In the current Google Search Console, which replaced Webmaster Tools, the URL Inspection tool serves the same purpose.
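As a quick local complement to Google's own tool, you can also list the important URLs of your site and check them against your rules. The following Python sketch does that with `urllib.robotparser`. The rules, the URLs and the function name `blocked_urls` are illustrative assumptions, and Python's parser does not interpret every rule exactly the way Google does, so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(rules: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs from `urls` that the rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Illustrative rules and URLs; replace them with your own.
rules = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/themes/
"""
important = [
    "https://www.example.com/",
    "https://www.example.com/blog/hello-world/",
    "https://www.example.com/wp-content/themes/mytheme/style.css",
]
print(blocked_urls(rules, important))
```

In this example the stylesheet shows up as blocked, which is the kind of accidental rule you would want to catch. To test the live file instead of a local copy, `RobotFileParser` also offers `set_url()` and `read()`.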

Final Words

As already mentioned, most WordPress sites have a robots.txt file by default. By creating your own robots.txt file, you can control the way a specific bot or search engine interacts with a specific part of your website.

It is important to know that the Disallow command is not the same as the noindex tag. The robots.txt file can block search engines from crawling a page, but it cannot stop them from indexing it. What you can do is shape the way search engines interact with your site by adding specific rules.

It is good to know which parts of your site should be crawled and which should be denied access, because Google generally looks at your website as a whole. If you use this file to block an important part that Google needs to know about, you might run into some major problems.

For example, you might unknowingly use the robots.txt file to block your styling components. In such a case, Google may consider your site to be of lower quality and might even penalize you.

What you put in your robots.txt file mostly depends on your website. It might be your affiliate links, your dashboard area or any other area that you think should not be accessed by bots. You can also do it for your plugins and themes, provided you do not block the files your pages need to display properly.

We hope this guide was helpful to you. Feel free to leave us a comment below in case you have any further queries. We would love to get back to you.